Linux

- Getting Started

- Linux on Chromebooks

- XPS 9310

- Bash

- SSH Configuration

- Configuring SSHD Authentication

- Enabling Google 2FA

- User Administration

- Yubikey SSH Authentication

- Tunneling

- System Admin

- Configure FTP

- Configure Postfix

- Configuring Multi-boot Filesystems

- Crontab

- Server Hostname

- Swap Allocation

- Synchronizing Time Using NTP

- Systemd Services

- Unattended Upgrades

- Interfaces

- Distributions

- Customization

- Kernel Management

- GRUB

- VirtualBox

- Boot Process

Getting Started

Overview

This page contains the basic information needed by anyone starting out with a Linux server. Much of this information has been compiled from other guides, but I have rewritten and reformatted the content to be more readily available. Some of the content here was referenced from sources such as the Linux Filesystem Hierarchy Standard (Linux FHS).

Basics

We'll start with using the terminal for basic tasks we usually do with GUIs in full desktop environments.

Searching for packages

sudo apt search "Dell XPS 13 9300"

Sorting... Done

Full Text Search... Done

oem-somerville-factory-melisa-meta/unknown,unknown,now 20.04ubuntu12 all [installed]

hardware support for Dell XPS 13 9300

oem-somerville-melisa-meta/unknown,unknown,now 20.04ubuntu12 all [installed]

hardware support for Dell XPS 13 9300

Installing packages

sudo apt install oem-somerville-melisa-meta

Updating package registry and upgrading installed packages

sudo apt update && sudo apt upgrade

Updating the package registry, upgrading packages, fixing broken packages, and removing unused packages

sudo apt update && sudo apt upgrade -y && sudo apt install --fix-broken -y && sudo apt autoremove -y

Checking system resources

htop

Checking Battery Consumption

sudo powertop

Connecting to WiFi

sudo nmcli device wifi list

IN-USE BSSID SSID MODE CHAN RATE SIGNAL BARS SECURITY

* 40:B8:9A:D7:EC:AF FAKE WIFI-2G Infra 1 195 Mbit/s 100 ▂▄▆█ WPA2

40:B8:9A:D7:EC:B0 FAKE WIFI-5G Infra 149 405 Mbit/s 94 ▂▄▆█ WPA2

FA:8F:CA:95:43:9B Living Room Infra 6 65 Mbit/s 75 ▂▄▆_ --

FA:8F:CA:82:9D:D4 Family Room TV.b Infra 6 65 Mbit/s 57 ▂▄▆_ --

14:ED:BB:1F:44:6D Hi Infra 8 130 Mbit/s 57 ▂▄▆_ WPA2

14:ED:BB:1F:44:76 ATT9eu7M6L Infra 149 540 Mbit/s 44 ▂▄__ WPA2

4C:ED:FB:AD:D8:08 Fluffymarshmellow Infra 1 540 Mbit/s 30 ▂___ WPA2

70:77:81:DE:43:59 WIFIDE4355 Infra 1 195 Mbit/s 24 ▂___ WPA2

70:5A:9E:6C:D4:29 TC8717T23 Infra 6 195 Mbit/s 19 ▂___ WPA2

A8:A7:95:E8:68:82 Wildflower-2G Infra 1 195 Mbit/s 14 ▂___ WPA2

CC:2D:21:57:E0:71 Rudy Infra 6 130 Mbit/s 14 ▂___ WPA1 WPA2

CE:A5:11:3C:E4:C2 Orbi_setup Infra 9 130 Mbit/s 14 ▂___ --

A8:6B:AD:EB:B4:56 Gypsy-2 Infra 6 195 Mbit/s 12 ▂___ WPA1 WPA2

CE:A5:11:3C:EF:8E Orbi_setup Infra 9 130 Mbit/s 12 ▂___ --

Now bring up a connection with the access point we want, and pass the --ask flag to enter a password for authentication.

sudo nmcli c up "FAKE WIFI-2G" --ask

Passwords or encryption keys are required to access the wireless network 'FAKE WIFI-2G'.

Password (802-11-wireless-security.psk): •••••••••••••••••••

Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/9)
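If you just want the strongest network from a scan like the one above, nmcli's terse mode (`-t`, with `-f` to pick fields) is easier to script against than the table. The function below is a sketch: `strongest_ssid` is a hypothetical helper, not part of nmcli, and it assumes the `SIGNAL,SSID` field order. Terse mode escapes any colon inside an SSID as `\:`, and this sketch leaves that escaping as-is.

```shell
# Hypothetical helper: print the SSID with the strongest signal.
# Expects terse nmcli output on stdin, one "SIGNAL:SSID" line per access point:
#   nmcli -t -f SIGNAL,SSID device wifi list | strongest_ssid
strongest_ssid() {
  # Drop hidden networks (empty SSID), sort numerically by signal (highest first),
  # keep the top line, then strip the leading "SIGNAL:" field.
  grep -v '^[0-9]*:$' | sort -t: -k1,1nr | head -n 1 | cut -d: -f2-
}
```

For the scan shown above, `nmcli -t -f SIGNAL,SSID device wifi list | strongest_ssid` would print `FAKE WIFI-2G`.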

Disabling wireless devices with rfkill

sudo rfkill list

0: phy0: Wireless LAN

Soft blocked: no

Hard blocked: no

1: hci0: Bluetooth

Soft blocked: yes

Hard blocked: no

Block WiFi

sudo rfkill block wlan

Block Bluetooth

sudo rfkill block bluetooth
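To see at a glance which radios are soft blocked, for example after running the block commands above, you can filter the `rfkill list` output. This is a sketch: `soft_blocked` is a hypothetical helper name, and it assumes the list format shown above, where each device header line starts with its index.

```shell
# Hypothetical helper: print the header line of every soft-blocked device.
# Reads `rfkill list` output on stdin:  rfkill list | soft_blocked
soft_blocked() {
  awk '
    /^[0-9]+: /         { name = $0 }   # device header, e.g. "1: hci0: Bluetooth"
    /Soft blocked: yes/ { print name }  # report the device this status line belongs to
  '
}
```

For the output above, `rfkill list | soft_blocked` prints `1: hci0: Bluetooth`. To reverse a block, use `sudo rfkill unblock bluetooth` (or `wlan`).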

Creating a User

sudo adduser username

Granting sudo to a user

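sudo usermod -aG sudo username

The -a (append) flag is important. Without it, -G replaces all of the user's existing supplementary groups with only the groups listed.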
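After granting sudo, you can confirm the membership with `id`. The sketch below wraps that check in a hypothetical `in_group` helper. New group memberships only apply to sessions started after the change, so the user needs to log out and back in before sudo works for them.

```shell
# Hypothetical helper: succeed if USER ($1) is a member of GROUP ($2).
# `id -nG USER` prints every group name the user belongs to, space separated.
in_group() {
  id -nG "$1" | tr ' ' '\n' | grep -qx -- "$2"
}
```

For example: `in_group username sudo && echo "username is in the sudo group"`.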

Resetting a user's password

sudo passwd username

Logging out of a user session, where kapper is my username:

sudo pkill -KILL -u kapper

Rebooting

sudo reboot

Man Pages

When troubleshooting a Linux server, it's important to know how to quickly gather specific information from credible resources using the tools available in the Bash terminal. One of these tools is the man pages. This set of documentation is well maintained, credible, and available from any terminal.

Local Storage Location

These pages are usually stored locally in /usr/share/man/, where they are updated as new packages are released and documentation changes. These local files let us reference the man pages offline if we disconnect from the internet. Within /usr/share/man/ you will also see locale-named directories, which simply hold man pages in other languages should you need them. See the example output below from listing the contents of /usr/share/man:

ls /usr/share/man/

cs/ es/ hu/ ja/ man2/ man5/ man8/ pl/ ru/ sv/ zh_TW/

da/ fi/ id/ ko/ man3/ man6/ man9/ pt/ sl/ tr/

de/ fr/ it/ man1/ man4/ man7/ nl/ pt_BR/ sr/ zh_CN/

| Directory | Content Category |
|---|---|
| /usr/share/man/man1 | User programs |
| /usr/share/man/man2 | System calls |
| /usr/share/man/man3 | Library calls |
| /usr/share/man/man4 | Special files |
| /usr/share/man/man5 | File formats |
| /usr/share/man/man6 | Games |
| /usr/share/man/man7 | Miscellaneous |
| /usr/share/man/man8 | System administration |
| /usr/share/man/man9 | Kernel routines (non-standard) |

The contents of these directories are optional and depend on the system and distribution.

This may seem beside the point, but it's good to know where these files are stored and to step through the locations yourself so you know what resources are available to you. I would urge anyone interested to check out the contents of these locations on their own system, and then view the man pages for topics that stand out. For completeness, below is an example of listing a directory and then viewing the man page for a topic found within it. The man page itself is not shown, since it opens within the active terminal.

ls /usr/share/man/man4

cciss.4.gz initrd.4.gz mem.4.gz random.4.gz vcs.4.gz

console_codes.4.gz intro.4.gz mouse.4.gz rtc.4.gz vcsa.4.gz

cpuid.4.gz kmem.4.gz msr.4.gz sd.4.gz veth.4.gz

dsp56k.4.gz lirc.4.gz null.4.gz smartpqi.4.gz wavelan.4.gz

full.4.gz loop-control.4.gz port.4.gz st.4.gz zero.4.gz

fuse.4.gz loop.4.gz ptmx.4.gz tty.4.gz

hd.4.gz lp.4.gz pts.4.gz ttyS.4.gz

hpsa.4.gz md.4.gz ram.4.gz urandom.4.gz

man console_codes

Indexing Pages

When viewing the manual pages, the amount of information can be overwhelming, and it is easy to miss subtle details that could prove very useful when information on a topic is otherwise scarce. A manual entry for a given topic can have several sections, each numbered according to the category the topic falls under. This is easy to understand once you work with it a bit. In the commands below, we use whatis to list all man pages associated with intro, find the corresponding files, and then match them to the categories in the table above.

whatis intro

intro (1) - introduction to user commands

intro (2) - introduction to system calls

intro (3) - introduction to library functions

intro (4) - introduction to special files

intro (5) - introduction to file formats and filesystems

intro (6) - introduction to games

intro (7) - introduction to overview and miscellany section

intro (8) - introduction to administration and privileged commands

find /usr/share/man/man* -name 'intro*'

/usr/share/man/man1/intro.1.gz

/usr/share/man/man2/intro.2.gz

/usr/share/man/man3/intro.3.gz

/usr/share/man/man4/intro.4.gz

/usr/share/man/man5/intro.5.gz

/usr/share/man/man6/intro.6.gz

/usr/share/man/man7/intro.7.gz

/usr/share/man/man8/intro.8.gz
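To tie the find output back to the section table, note that the numeric suffix in each file name is the section. The sketch below uses a hypothetical `man_section` helper to strip the directory and `.gz` extension, then map the section's leading digit to its category. It assumes gzip-compressed pages, as on this system, and treats suffixed sections such as `3am` as their base section.

```shell
# Hypothetical helper: map a man page file to "SECTION CATEGORY",
# e.g. /usr/share/man/man4/intro.4.gz -> "4 Special files".
man_section() {
  file=${1##*/}          # drop the directory:  intro.4.gz
  file=${file%.gz}       # drop the extension:  intro.4
  section=${file##*.}    # keep the suffix:     4 (or e.g. 3am)
  case $section in
    1*) name="User programs" ;;
    2*) name="System calls" ;;
    3*) name="Library calls" ;;
    4*) name="Special files" ;;
    5*) name="File formats" ;;
    6*) name="Games" ;;
    7*) name="Miscellaneous" ;;
    8*) name="System administration" ;;
    9*) name="Kernel routines" ;;  # non-standard section
    *)  name="Unknown" ;;
  esac
  printf '%s %s\n' "$section" "$name"
}
```

To label every intro page found above: `find /usr/share/man/man* -name 'intro*' | while read -r f; do man_section "$f"; done`.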

The intro manual pages are a perfect example, since it's easy to relate them to the table above. Next, we run whatis time:

whatis time

time (1) - run programs and summarize system resource usage

time (7) - overview of time and timers

time (3am) - time functions for gawk

Then view a specific result by running man <section> time, where <section> is the section of the page we'd like to view.

user@knoats:~$ man 3am time

The information is organized as we'd expect from the table above. The first section, time (1), is the man page for the time command and how to use it when running user programs. The next section, time (3am), documents the time functions available in gawk (GNU awk). The final section, time (7), is a general overview of time and timers in Linux.

Text Editor

You will need to edit text when working in Linux, and a popular and powerful tool for doing so is vim. Vim can be tricky to use at first, but there are resources available to help teach it to newcomers. There is even a command-line tutor that walks you through vim from within a terminal using interactive text tutorials. To start it, simply run vimtutor from any Linux command line. I would elaborate more on this topic, since vim is such an important tool for Linux server administration, but there are plenty of resources out there that offer much more information. Instead, I'll link to some good information here. Or, if you don't have immediate access to a terminal, a quick search for interactive vim tutorials should turn up browser-based games that teach the basics.

| Plugins / Enhancements | Link |
|---|---|
| Syntax Checker for Vim | https://github.com/vim-syntastic/syntastic |
| Snippets | https://github.com/SirVer/ultisnips |
| Vim Solarized | https://github.com/altercation/vim-colors-solarized |
| Code Completion | https://github.com/ycm-core/YouCompleteMe |
| Git Plugin | https://github.com/tpope/vim-fugitive |
| Auto Configuration Tool | https://github.com/chxuan/vimplus |
| Community Vim Distribution | https://github.com/SpaceVim/SpaceVim |
| Everything Else | https://github.com/mhinz/vim-galore |

Linux on Chromebooks

Booting Persistent USB

It is possible to boot from a USB 3.1 stick with persistent data saved between sessions. There are plenty of cheap, ultra-portable USB sticks out there that you'll hardly notice thanks to their low profiles, and there are even better options if your Chromebook has USB-C ports. The main limiting factor on your system will often be read/write speed, since all of your data is funnelled through a USB device instead of internal storage. Be careful to choose the fastest port on your device; you will be glad you did later on.

If you boot this way, you won't have to use crouton or even boot into ChromeOS (CrOS). You'll need to enable developer mode, then press CTRL+L when the Chromebook reboots and displays the warning that OS verification is off. To boot into CrOS instead, press CTRL+D. If you press nothing, the Chromebook will eventually sound a loud BEEP and boot into CrOS.

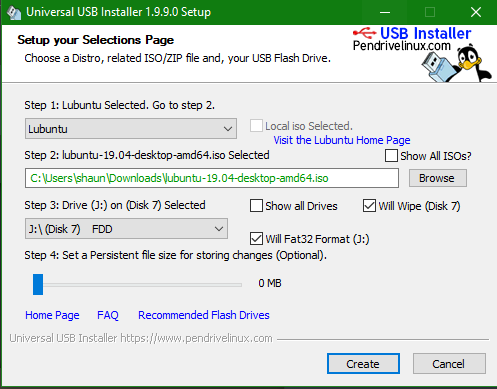

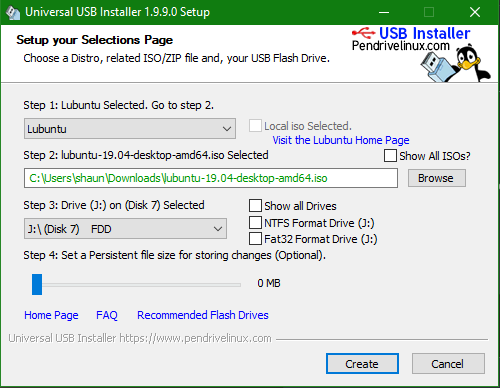

If you have a Windows machine handy, check out the links below to see how you can create a persistent USB for booting. This method does not install Linux onto the USB; instead, it creates a Live USB (installation media) of your selected distribution. If created following these directions, the Live USB contains a persistent filesystem, which allows you to retain settings and application data between reboots.

Normally, a Live USB has no persistence and all data is lost between reboots, so follow the steps carefully when creating your USB.

Consider how much you plan to store on your system. For me, 30GB of storage is plenty for all my programming and server administration from a Lubuntu installation.

My Toshiba Chromebook 2 runs on a massive 2GB of RAM, paired with a generation 6 Intel Duo ~2GHz and 16GB of internal storage. It cost me $100 used off eBay in 2015 and is still running strong. I run Lubuntu booted from a USB 3.1 stick, using i3wm and the bare minimum of packages installed and running. I have no issues with VS Code, PyCharm, or LaTeX editors, and all office applications work fine. The main issue to note is web browsing: Chrome or any derivative will consume nearly all of your RAM. Firefox does OK, and if you visit about:memory in the address bar you can click the 'Minimize memory usage' button to reclaim some. Midori is my preferred browser when I'm not dealing with personal accounts and am just reading documentation.

Enable Developer Mode

Once you have made the USB stick with the Linux distribution you want to run, start your Chromebook and open a crosh terminal with CTRL+ALT+T. In the console window you'll see a crosh> prompt. Type the commands below and read the output. Note that the shell command is only available once developer mode is enabled.

Welcome to crosh, the Chrome OS developer shell.

If you got here by mistake, don't panic! Just close this tab and carry on.

Type 'help' for a list of commands.

If you want to customize the look/behavior, you can use the options page.

Load it by using the Ctrl-Shift-P keyboard shortcut.

crosh> shell

chronos@localhost / $

Next, check the available settings by running the crossystem command. This output is useful if you want more information on what values you're setting, and why.

chronos@localhost / $ sudo crossystem

Password:

arch = x86 # [RO/str] Platform architecture

backup_nvram_request = 1 # [RW/int] Backup the nvram somewhere at the next boot. Cleared on success.

battery_cutoff_request = 0 # [RW/int] Cut off battery and shutdown on next boot

block_devmode = 0 # [RW/int] Block all use of developer mode

clear_tpm_owner_done = 0 # [RW/int] Clear TPM owner done

clear_tpm_owner_request = 0 # [RW/int] Clear TPM owner on next boot

cros_debug = 1 # [RO/int] OS should allow debug features

dbg_reset = 0 # [RW/int] Debug reset mode request

debug_build = 0 # [RO/int] OS image built for debug features

dev_boot_altfw = 0 # [RW/int] Enable developer mode alternate bootloader

dev_boot_signed_only = 0 # [RW/int] Enable developer mode boot only from official kernels

dev_boot_usb = 0 # [RW/int] Enable developer mode boot from external disk (USB/SD)

dev_default_boot = disk # [RW/str] Default boot from disk, altfw or usb

dev_enable_udc = 0 # [RW/int] Enable USB Device Controller

devsw_boot = 1 # [RO/int] Developer switch position at boot

devsw_cur = 1 # [RO/int] Developer switch current position

diagnostic_request = 0 # [RW/int] Request diagnostic rom run on next boot

disable_dev_request = 0 # [RW/int] Disable virtual dev-mode on next boot

ecfw_act = RW # [RO/str] Active EC firmware

post_ec_sync_delay = 0 # [RW/int] Short delay after EC software sync (persistent, writable, eve only)

fw_prev_result = unknown # [RO/str] Firmware result of previous boot (vboot2)

fw_prev_tried = A # [RO/str] Firmware tried on previous boot (vboot2)

fw_result = unknown # [RW/str] Firmware result this boot (vboot2)

fw_tried = A # [RO/str] Firmware tried this boot (vboot2)

fw_try_count = 0 # [RW/int] Number of times to try fw_try_next

fw_try_next = A # [RW/str] Firmware to try next (vboot2)

fw_vboot2 = 0 # [RO/int] 1 if firmware was selected by vboot2 or 0 otherwise

fwb_tries = 0 # [RW/int] Try firmware B count

fwid = Google_Swanky.5216.238.150 # [RO/str] Active firmware ID

fwupdate_tries = 0 # [RW/int] Times to try OS firmware update (inside kern_nv)

hwid = SWANKY E5A-E3P-A47 # [RO/str] Hardware ID

inside_vm = 0 # [RO/int] Running in a VM?

kern_nv = 0x0000 # [RO/int] Non-volatile field for kernel use

kernel_max_rollforward = 0x00000000 # [RW/int] Max kernel version to store into TPM

kernkey_vfy = sig # [RO/str] Type of verification done on kernel keyblock

loc_idx = 0 # [RW/int] Localization index for firmware screens

mainfw_act = A # [RO/str] Active main firmware

mainfw_type = developer # [RO/str] Active main firmware type

nvram_cleared = 0 # [RW/int] Have NV settings been lost? Write 0 to clear

display_request = 0 # [RW/int] Should we initialize the display at boot?

phase_enforcement = (error) # [RO/int] Board should have full security settings applied

recovery_reason = 0 # [RO/int] Recovery mode reason for current boot

recovery_request = 0 # [RW/int] Recovery mode request

recovery_subcode = 0 # [RW/int] Recovery reason subcode

recoverysw_boot = 0 # [RO/int] Recovery switch position at boot

recoverysw_cur = (error) # [RO/int] Recovery switch current position

recoverysw_ec_boot = 0 # [RO/int] Recovery switch position at EC boot

ro_fwid = Google_Swanky.5216.238.5 # [RO/str] Read-only firmware ID

tpm_attack = 0 # [RW/int] TPM was interrupted since this flag was cleared

tpm_fwver = 0x00050003 # [RO/int] Firmware version stored in TPM

tpm_kernver = 0x00030001 # [RO/int] Kernel version stored in TPM

tpm_rebooted = 0 # [RO/int] TPM requesting repeated reboot (vboot2)

tried_fwb = 0 # [RO/int] Tried firmware B before A this boot

try_ro_sync = 0 # [RO/int] try read only software sync

vdat_flags = 0x00002c56 # [RO/int] Flags from VbSharedData

wipeout_request = 0 # [RW/int] Firmware requested factory reset (wipeout)

wpsw_cur = 1 # [RO/int] Firmware write protect hardware switch current position

The settings we're interested in are dev_boot_usb and dev_boot_altfw, so run the following commands to allow booting from USB and from the alternate (legacy) bootloader:

chronos@localhost / $ sudo crossystem dev_boot_usb=1

chronos@localhost / $ sudo crossystem dev_boot_altfw=1

Now plug in the USB and reboot the Chromebook. When you see the white warning screen about third-party operating systems, press CTRL+L and you'll see a prompt to select the USB device to boot from. Since you installed your distribution to the USB with persistence, your data will be saved to the USB. You will need to keep the USB plugged in at all times, which is not ideal, but it works, and it's better than being stuck in CrOS.

NOTE: The old command for this was dev_boot_legacy, but it has since changed. We can see this when trying to set the old variable name, which leads us to setting dev_boot_altfw.

chronos@localhost / $ sudo crossystem dev_boot_legacy=1

Password:

!!!

!!! PLEASE USE 'dev_boot_altfw' INSTEAD OF 'dev_boot_legacy'

!!!

Booting From USB

That's it! Now make sure the USB is plugged in when you boot up your Chromebook. When you see the warning that OS verification is off, press CTRL+L and select your USB. To boot into CrOS instead, press CTRL+D at this screen. Since we never actually wrote to the laptop's internal storage, CrOS is still intact, and you can hop between it and the Linux distribution on your USB. I usually browsed the web during class on CrOS, then switched over to my Linux USB when we started programming.

Since you created the USB with persistent data, you don't need to install the distribution; you can use it as-is right off the USB drive. It's not ideal, since it runs much slower than an install on an SSD or HDD, but this method got me through college on a budget when my only laptop was a Chromebook.

Using Crouton

Crouton allows you to install Linux alongside ChromeOS on a Chromebook. Click here to grab the latest crouton installer directly, or visit Crouton's repository and click the goo.gl link in its description.

To sync your Chromebook's local clipboard with your Linux install, grab the Crouton extension from the Chrome Web Store.

Once you have this file, use the file browser to make sure it is in your Chromebook's ~/Downloads directory, then run the commands below from the crosh shell to install Linux:

# Install the crouton binary for use within your chromebook's shell

sudo install -Dt /usr/local/bin -m 755 ~/Downloads/crouton

# Passing -e for encryption, we install all the dependencies for X11 and name(-n) it i3

sudo crouton -e -t core,keyboard,audio,cli-extra,gtk-extra,extension,x11,xorg -n i3

# Enter the chroot

sudo enter-chroot -n i3

# Install i3

sudo apt install i3

# Tell Xorg to start i3 automatically

echo "exec i3" > ~/.xinitrc

# Exit the chroot

exit

# Back in the ChromeOS shell (as chronos), add an alias for starting i3 in crouton using X

echo "alias starti3='sudo enter-chroot -n i3 xinit'" >> /home/chronos/user/.bashrc

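Want i3-gaps instead? See the i3-gaps links below for instructions. The first step is to install software-properties-common, which provides the add-apt-repository command used to add the i3-gaps PPA:

sudo apt-get install software-properties-common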

If you're unsure which Distro or DE to install, see the below commands for lists of supported versions.

# List supported Linux releases

sudo crouton -r list

# List supported Linux desktop environments

sudo crouton -t list

# Update chroot (you will need this eventually)

sudo crouton -u -n chrootname

Removing / editing chroots is done via the edit-chroot CLI -

# Print help text

sudo edit-chroot

# Remove chroot named i3

sudo edit-chroot -d i3

# Backup chroot

sudo edit-chroot -b chrootname

# Restore chroot from most recent tarball

sudo edit-chroot -r chrootname

# Restore from specific tarball (new machine?)

sudo edit-chroot -f mybackup.tar.gz

- https://github.com/dnschneid/crouton

- https://github.com/pasiegel/i3-gaps-install-ubuntu/blob/master/i3-gaps

- https://launchpad.net/~simon-monette/+archive/ubuntu/i3-gaps

- https://stackoverflow.com/questions/53800051/repository-does-not-have-a-release-file-error

XPS 9310

I installed my own SSD after purchasing a model from Dell with a small SSD. This was mostly because I wanted to store the original Dell SSD as-is in a box, so that if I had to submit a claim or sell the laptop later, I could reinstall it and the laptop would be exactly as it arrived from Dell. Installing the SSD was not difficult, but it did require a plastic pry tool to remove the back cover of the laptop. Be very careful not to use any hard tools or too much force, or you could damage the chassis. I was able to replace the SSD several times without issue and without damaging the chassis.

I removed the heat shield and installed my new SSD. Of course, be sure to put the heat shield back before closing up the laptop.

Dell provides service manuals for all its laptops; check Dell's website for instructions. You'll just need to remove the back plate, remove the heat shield over the SSD, and then swap out the M.2 drive for your own. It's really not too difficult, but the back plate was a challenge the first time around. You'll get a feel for it after that; just be careful and take your time, since your top priority should be not damaging the laptop. Putting the back plate back on is also a bit strange, so follow the service manual: start from the hinges and rotate the plate down into place. It should not require a lot of force, so be careful.

You'll need a Torx T5 screwdriver for the chassis; I used a common Craftsman 6-in-1 screwdriver for the M.2 screw. Don't use too small a screwdriver on the M.2 screw, or you'll strip it. The Dell service manual lists exact sizes for all screw heads.

WiFi worked out of the box on Kubuntu 20.04.

Had issues with Steam download speeds, see Steam section for details.

Fingerprint reader works, haven't configured my display manager to use it for login yet. See fingerprint section for details.

Iris graphics device works out of the box. OpenGL detects graphics interface.

OpenGL 4.6 (Core Profile) Mesa 21.0.3 ( CoreProfile )

OpenGL Vendor: Intel

Rendering Device: Mesa Intel(R) Xe Graphics (TGL GT2)

Some output from lscpu for context -

kapper@xps:~/Code/qtk/build$ lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

Address sizes: 39 bits physical, 48 bits virtual

CPU(s): 8

On-line CPU(s) list: 0-7

Thread(s) per core: 2

Core(s) per socket: 4

Socket(s): 1

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 140

Model name: 11th Gen Intel(R) Core(TM) i7-1195G7 @ 2.90GHz

Stepping: 2

CPU MHz: 2504.062

CPU max MHz: 5000.0000

CPU min MHz: 400.0000

BogoMIPS: 5836.80

Virtualization: VT-x

L1d cache: 192 KiB

L1i cache: 128 KiB

L2 cache: 5 MiB

L3 cache: 12 MiB

NUMA node0 CPU(s): 0-7

Dell XPS Linux Drivers

First, search for Dell's OEM driver package for the XPS 13 9300 oem-somerville-melisa-meta.

sudo apt search "XPS 13 9300"

Sorting... Done

Full Text Search... Done

oem-somerville-factory-melisa-meta/unknown,unknown 20.04ubuntu12 all

hardware support for Dell XPS 13 9300

oem-somerville-melisa-meta/unknown,unknown,now 20.04ubuntu12 all [installed]

hardware support for Dell XPS 13 9300

Now, install both packages, and then update the package registry. We're updating because installing the somerville packages adds additional package repositories to our apt sources -

sudo apt install oem-somerville-melisa-meta oem-somerville-factory-melisa-meta && sudo apt update

Now you have installed factory drivers and updated your apt package registry to include additional drivers you can optionally download. To install fingerprint reader drivers, we will need the packages in these repositories.

Application Shortcuts

This is more of a Linux / Kubuntu thing, but it was a lot of help in setting up the XPS 9310 to use the start menu for launching custom AppImages, commands, and executables stored in /opt/.

ls ~/.local/share/applications

'7 Days to Die.desktop' jetbrains-datagrip.desktop jetbrains-webstorm.desktop

bitwarden.desktop jetbrains-dataspell.desktop 'Medieval Dynasty.desktop'

'Cities Skylines.desktop' jetbrains-goland.desktop mimeinfo.cache

CryoFall.desktop jetbrains-pycharm.desktop 'Oxygen Not Included.desktop'

'Gunfire Reborn.desktop' jetbrains-rider.desktop Rust.desktop

Icarus.desktop jetbrains-rubymine.desktop unity-hub.desktop

jetbrains-clion.desktop jetbrains-toolbox.desktop

Here's an example that runs the executable at /opt/bitwarden to start the Bitwarden Linux client. For the icon, you can download any .ico or .png file and use the full path to it.

[Desktop Entry]

Comment[en_US]=

Comment=

Exec=/opt/bitwarden

GenericName[en_US]=

GenericName=

Icon=/home/kapper/Documents/Icons/bitwarden_icon.ico

MimeType=

Name[en_US]=Bitwarden

Name=Bitwarden

Path=

StartupNotify=true

Terminal=false

TerminalOptions=

Type=Application

X-DBUS-ServiceName=

X-DBUS-StartupType=

X-KDE-SubstituteUID=false

X-KDE-Username=

Hidden=false
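Rather than writing each launcher by hand, a small function can generate a minimal entry. This is a sketch: `make_launcher` is a hypothetical helper. It writes only a subset of the keys from the Bitwarden example (Type, Name, Exec, Icon, Terminal, StartupNotify), on the assumption that the empty keys above are optional.

```shell
# Hypothetical helper: write a minimal .desktop launcher and print its path.
# Usage: make_launcher NAME EXEC ICON [DIR]
# DIR defaults to ~/.local/share/applications, the per-user directory listed above.
make_launcher() {
  name=$1 exec_path=$2 icon=$3
  dir=${4:-"$HOME/.local/share/applications"}
  mkdir -p "$dir"
  # Unquoted EOF so $name, $exec_path and $icon are expanded into the file.
  cat > "$dir/$name.desktop" <<EOF
[Desktop Entry]
Type=Application
Name=$name
Exec=$exec_path
Icon=$icon
Terminal=false
StartupNotify=true
EOF
  printf '%s\n' "$dir/$name.desktop"
}
```

For example: `make_launcher Bitwarden /opt/bitwarden /home/kapper/Documents/Icons/bitwarden_icon.ico`. If desktop-file-utils is installed, `desktop-file-validate` can check the generated file.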

The libreoffice package installs a desktop file that customizes the right-click context menu. See below for an example.

# sudo apt install libreoffice

# sudoedit /usr/share/applications/libreoffice-startcenter.desktop

[Desktop Entry]

Version=1.0

Terminal=false

NoDisplay=false

Icon=libreoffice-startcenter

Type=Application

Categories=Office;X-Red-Hat-Base;X-SuSE-Core-Office;X-MandrivaLinux-Office-Other;

Exec=libreoffice %U

MimeType=application/vnd.openofficeorg.extension;x-scheme-handler/vnd.libreoffice.cmis;

Name=LibreOffice

GenericName=Office

Comment=The office productivity suite compatible to the open and standardized ODF document format. Supported by The Document Foundation.

StartupNotify=true

X-GIO-NoFuse=true

StartupWMClass=libreoffice-startcenter

X-KDE-Protocols=file,http,ftp,webdav,webdavs

X-AppStream-Ignore=True

NotShowIn=GNOME;

##Define Actions

Actions=Writer;Calc;Impress;Draw;Base;Math;

[Desktop Action Writer]

Name=Writer

Exec=libreoffice --writer

[Desktop Action Calc]

Name=Calc

Exec=libreoffice --calc

[Desktop Action Impress]

Name=Impress

Exec=libreoffice --impress

[Desktop Action Draw]

Name=Draw

Exec=libreoffice --draw

[Desktop Action Base]

Name=Base

Exec=libreoffice --base

[Desktop Action Math]

Name=Math

Exec=libreoffice --math

##End of actions menu

BIOS Upgrade

Only run these commands when the laptop is plugged into AC power. If the laptop shuts down unexpectedly during the update, you will have serious issues and will probably need to ship your machine to Dell for a fix.

fwupdmgr get-devices

fwupdmgr refresh --force

fwupdmgr get-updates

fwupdmgr update

Then reboot the PC when connected to AC power and the BIOS update will start.
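Because the update depends on staying plugged in, a quick check before running fwupdmgr update can help. The sketch below uses a hypothetical `ac_connected` helper that looks under /sys/class/power_supply for a supply of type `Mains` reporting itself online. Adapter names vary between laptops (AC, ADP1, ...), and a USB-C charger may be reported differently on some machines, so a failed check is a prompt to look manually, not proof that you are on battery.

```shell
# Hypothetical helper: succeed only if a mains (AC) adapter reports online.
# BASE defaults to the live sysfs tree; pass another directory to test.
ac_connected() {
  base=${1:-/sys/class/power_supply}
  for dev in "$base"/*; do
    # Batteries have no "online" file, so they are skipped here.
    [ -r "$dev/type" ] && [ -r "$dev/online" ] || continue
    if [ "$(cat "$dev/type")" = "Mains" ] && [ "$(cat "$dev/online")" = "1" ]; then
      return 0
    fi
  done
  return 1
}
```

For example: `if ac_connected; then fwupdmgr update; else echo "Connect AC power first" >&2; fi`.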

Steam

Bad download speeds on Steam

Disabling IPv6 fixed my download speeds after I ran the commands below and restarted Steam.

I followed the instructions on linuxconfig.org.

sudo sysctl -w net.ipv6.conf.all.disable_ipv6=1

sudo sysctl -w net.ipv6.conf.default.disable_ipv6=1

You should restart Steam if it is already running when changing these settings. There will be no notification to tell you to do so, but you could experience connection issues until you do.

You can re-enable IPv6 later by setting the same values back to 0:

sudo sysctl -w net.ipv6.conf.all.disable_ipv6=0

sudo sysctl -w net.ipv6.conf.default.disable_ipv6=0

To make this setting persist, you can add the lines above to the /etc/sysctl.conf configuration file. There will be a lot of comments and information in this file when you open it for editing, but just add the lines below and when you reboot the settings will be applied automatically.

#/etc/sysctl.conf

net.ipv6.conf.all.disable_ipv6=1

net.ipv6.conf.default.disable_ipv6=1

To check if we missed any settings, we can use the sysctl CLI -

sudo sysctl -a | grep disable_ipv6

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

net.ipv6.conf.lo.disable_ipv6 = 1

net.ipv6.conf.wlp0s20f3.disable_ipv6 = 1

These additional settings can be added to our configurations if needed.
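The grep above shows every value at once. To list only the interfaces that still have IPv6 enabled, a short loop over /proc/sys/net/ipv6/conf works. These are the same values `sysctl -a` reports for these keys, and they are readable without sudo. `ipv6_still_enabled` is a hypothetical helper name; this is a sketch.

```shell
# Hypothetical helper: print each interface whose disable_ipv6 flag is not 1.
# BASE defaults to the live kernel tree; pass another directory to test.
ipv6_still_enabled() {
  base=${1:-/proc/sys/net/ipv6/conf}
  for flag in "$base"/*/disable_ipv6; do
    [ -r "$flag" ] || continue
    if [ "$(cat "$flag")" != "1" ]; then
      iface=${flag%/disable_ipv6}     # strip the file name...
      printf '%s\n' "${iface##*/}"    # ...and print just the interface name
    fi
  done
}
```

Empty output means IPv6 is disabled everywhere. Any interface that is listed can be switched off individually, e.g. `sudo sysctl -w net.ipv6.conf.wlp0s20f3.disable_ipv6=1`.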

Editing the /etc/sysctl.conf file did not make these settings persist between reboots for me. What I ended up doing was editing the connection settings in the network manager that comes installed with Kubuntu's Plasma desktop. Right-click the WiFi icon in the system tray and edit the connections in the GUI window, or run kcmshell5 kcm_networkmanagement to open the same GUI directly from a console. The added benefit of this approach is that you don't need to modify any kernel parameters, so your computer only ignores IPv6 when using this specific connection; otherwise, IPv6 is enabled as normal.

Fingerprint

Install the fingerprint reader drivers. To get these, you must have installed the oem-somerville packages from the Dell XPS Linux Drivers section above.

sudo apt install libfprint-2-tod1-goodix

That's it! The fingerprint reader now works, but I haven't configured it for login or the lock screen on Kubuntu yet. To enroll a fingerprint for the kapper user, run the command below:

fprintd-enroll kapper -f right-index-finger

And to test, run the following command

fprintd-verify kapper -f right-index-finger

Using device /net/reactivated/Fprint/Device/0

Listing enrolled fingers:

- #0: left-index-finger

- #1: right-index-finger

Verify started!

Verifying: right-index-finger

Verify result: verify-match (done)

Battery Life Improvements

To improve battery life, I installed tlp and configured /etc/tlp.d/01-kapper.conf. These settings are loaded the next time you reboot, or you can run sudo tlp start to load them now without rebooting.
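The contents of 01-kapper.conf aren't shown here, so the sketch below only illustrates the drop-in format with a few TLP 1.3 CPU options. The values are examples, not a recommended configuration. `write_tlp_dropin` and the file name 02-battery-example.conf are hypothetical. Run it from a root shell (for example after sudo -i), check the merged configuration with sudo tlp-stat -c, and apply it with sudo tlp start.

```shell
# Hypothetical helper: write an example TLP drop-in and print its path.
# DIR defaults to /etc/tlp.d; pass another directory to test.
write_tlp_dropin() {
  dir=${1:-/etc/tlp.d}
  file="$dir/02-battery-example.conf"   # hypothetical file name
  # Quoted 'EOF' so the settings are written literally.
  cat > "$file" <<'EOF'
# Example values only: favor battery life when unplugged
CPU_SCALING_GOVERNOR_ON_BAT=powersave
CPU_ENERGY_PERF_POLICY_ON_AC=balance_performance
CPU_ENERGY_PERF_POLICY_ON_BAT=power
CPU_BOOST_ON_BAT=0
EOF
  printf '%s\n' "$file"
}
```

The energy performance values used here (balance_performance, power) appear in the energy_performance_available_preferences list reported by tlp-stat -p.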

First, to see the minimum and maximum frequencies for our CPU, run the following command and check the output:

sudo tlp-stat -p

--- TLP 1.3.1 --------------------------------------------

+++ Processor

CPU model = 11th Gen Intel(R) Core(TM) i7-1195G7 @ 2.90GHz

/sys/devices/system/cpu/cpu0/cpufreq/scaling_driver = intel_pstate

/sys/devices/system/cpu/cpu0/cpufreq/scaling_governor = powersave

/sys/devices/system/cpu/cpu0/cpufreq/scaling_available_governors = performance powersave

/sys/devices/system/cpu/cpu0/cpufreq/scaling_min_freq = 400000 [kHz]

/sys/devices/system/cpu/cpu0/cpufreq/scaling_max_freq = 5000000 [kHz]

/sys/devices/system/cpu/cpu0/cpufreq/energy_performance_preference = balance_power [HWP.EPP]

/sys/devices/system/cpu/cpu0/cpufreq/energy_performance_available_preferences = default performance balance_performance balance_power power

/sys/devices/system/cpu/cpu1/cpufreq/scaling_driver = intel_pstate

/sys/devices/system/cpu/cpu1/cpufreq/scaling_governor = powersave

/sys/devices/system/cpu/cpu1/cpufreq/scaling_available_governors = performance powersave

/sys/devices/system/cpu/cpu1/cpufreq/scaling_min_freq = 400000 [kHz]

/sys/devices/system/cpu/cpu1/cpufreq/scaling_max_freq = 4800000 [kHz]

/sys/devices/system/cpu/cpu1/cpufreq/energy_performance_preference = balance_power [HWP.EPP]

/sys/devices/system/cpu/cpu1/cpufreq/energy_performance_available_preferences = default performance balance_performance balance_power power

/sys/devices/system/cpu/cpu2/cpufreq/scaling_driver = intel_pstate

/sys/devices/system/cpu/cpu2/cpufreq/scaling_governor = powersave

/sys/devices/system/cpu/cpu2/cpufreq/scaling_available_governors = performance powersave

/sys/devices/system/cpu/cpu2/cpufreq/scaling_min_freq = 400000 [kHz]

/sys/devices/system/cpu/cpu2/cpufreq/scaling_max_freq = 4800000 [kHz]

/sys/devices/system/cpu/cpu2/cpufreq/energy_performance_preference = balance_power [HWP.EPP]

/sys/devices/system/cpu/cpu2/cpufreq/energy_performance_available_preferences = default performance balance_performance balance_power power

/sys/devices/system/cpu/cpu3/cpufreq/scaling_driver = intel_pstate

/sys/devices/system/cpu/cpu3/cpufreq/scaling_governor = powersave

/sys/devices/system/cpu/cpu3/cpufreq/scaling_available_governors = performance powersave

/sys/devices/system/cpu/cpu3/cpufreq/scaling_min_freq = 400000 [kHz]

/sys/devices/system/cpu/cpu3/cpufreq/scaling_max_freq = 5000000 [kHz]

/sys/devices/system/cpu/cpu3/cpufreq/energy_performance_preference = balance_power [HWP.EPP]

/sys/devices/system/cpu/cpu3/cpufreq/energy_performance_available_preferences = default performance balance_performance balance_power power

/sys/devices/system/cpu/cpu4/cpufreq/scaling_driver = intel_pstate

/sys/devices/system/cpu/cpu4/cpufreq/scaling_governor = powersave

/sys/devices/system/cpu/cpu4/cpufreq/scaling_available_governors = performance powersave

/sys/devices/system/cpu/cpu4/cpufreq/scaling_min_freq = 400000 [kHz]

/sys/devices/system/cpu/cpu4/cpufreq/scaling_max_freq = 5000000 [kHz]

/sys/devices/system/cpu/cpu4/cpufreq/energy_performance_preference = balance_power [HWP.EPP]

/sys/devices/system/cpu/cpu4/cpufreq/energy_performance_available_preferences = default performance balance_performance balance_power power

/sys/devices/system/cpu/cpu5/cpufreq/scaling_driver = intel_pstate

/sys/devices/system/cpu/cpu5/cpufreq/scaling_governor = powersave

/sys/devices/system/cpu/cpu5/cpufreq/scaling_available_governors = performance powersave

/sys/devices/system/cpu/cpu5/cpufreq/scaling_min_freq = 400000 [kHz]

/sys/devices/system/cpu/cpu5/cpufreq/scaling_max_freq = 4800000 [kHz]

/sys/devices/system/cpu/cpu5/cpufreq/energy_performance_preference = balance_power [HWP.EPP]

/sys/devices/system/cpu/cpu5/cpufreq/energy_performance_available_preferences = default performance balance_performance balance_power power

/sys/devices/system/cpu/cpu6/cpufreq/scaling_driver = intel_pstate

/sys/devices/system/cpu/cpu6/cpufreq/scaling_governor = powersave

/sys/devices/system/cpu/cpu6/cpufreq/scaling_available_governors = performance powersave

/sys/devices/system/cpu/cpu6/cpufreq/scaling_min_freq = 400000 [kHz]

/sys/devices/system/cpu/cpu6/cpufreq/scaling_max_freq = 4800000 [kHz]

/sys/devices/system/cpu/cpu6/cpufreq/energy_performance_preference = balance_power [HWP.EPP]

/sys/devices/system/cpu/cpu6/cpufreq/energy_performance_available_preferences = default performance balance_performance balance_power power

/sys/devices/system/cpu/cpu7/cpufreq/scaling_driver = intel_pstate

/sys/devices/system/cpu/cpu7/cpufreq/scaling_governor = powersave

/sys/devices/system/cpu/cpu7/cpufreq/scaling_available_governors = performance powersave

/sys/devices/system/cpu/cpu7/cpufreq/scaling_min_freq = 400000 [kHz]

/sys/devices/system/cpu/cpu7/cpufreq/scaling_max_freq = 5000000 [kHz]

/sys/devices/system/cpu/cpu7/cpufreq/energy_performance_preference = balance_power [HWP.EPP]

/sys/devices/system/cpu/cpu7/cpufreq/energy_performance_available_preferences = default performance balance_performance balance_power power

/sys/devices/system/cpu/intel_pstate/min_perf_pct = 8 [%]

/sys/devices/system/cpu/intel_pstate/max_perf_pct = 100 [%]

/sys/devices/system/cpu/intel_pstate/no_turbo = 0

/sys/devices/system/cpu/intel_pstate/turbo_pct = 45 [%]

/sys/devices/system/cpu/intel_pstate/num_pstates = 47

/sys/module/workqueue/parameters/power_efficient = Y

/proc/sys/kernel/nmi_watchdog = 0

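We can see that the CPU's minimum frequency is 400000 kHz (400 MHz) and its maximum is 5000000 kHz (5 GHz); some cores report a slightly lower maximum of 4800000 kHz.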
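If you'd rather read these limits straight from sysfs, the function below is a minimal sketch. It assumes the cpufreq files that tlp-stat reports exist under /sys/devices/system/cpu, and it reads cpuinfo_min_freq and cpuinfo_max_freq (the hardware limits) rather than scaling_min_freq and scaling_max_freq, because the scaling values are exactly what TLP changes once it is running. The function name and the SYSFS_CPU override are hypothetical, added only so the function can be pointed at a test directory.

```shell
# Hypothetical helper: report the lowest hardware minimum and highest
# hardware maximum frequency (in kHz) across all CPUs via cpufreq sysfs.
# SYSFS_CPU is an illustrative override so the function can be tested
# against a fake directory tree; it defaults to the real sysfs path.
cpu_freq_range() {
  local root=${SYSFS_CPU:-/sys/devices/system/cpu}
  local min='' max='' dir lo hi
  for dir in "$root"/cpu[0-9]*/cpufreq; do
    # Skip CPUs (or an unmatched glob) without readable cpufreq files
    [ -r "$dir/cpuinfo_min_freq" ] && [ -r "$dir/cpuinfo_max_freq" ] || continue
    lo=$(<"$dir/cpuinfo_min_freq")
    hi=$(<"$dir/cpuinfo_max_freq")
    if [ -z "$min" ] || [ "$lo" -lt "$min" ]; then min=$lo; fi
    if [ -z "$max" ] || [ "$hi" -gt "$max" ]; then max=$hi; fi
  done
  if [ -z "$min" ]; then
    echo "no cpufreq data found under $root" >&2
    return 1
  fi
  echo "min=$min max=$max"
}

# Usage: cpu_freq_range
```

The values are in kHz, the same unit tlp-stat uses, so they can be copied into the CPU_SCALING_* settings directly.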

Next, we should check the frequencies for the GPU. For me, this is an integrated Intel GPU. To check its minimum and maximum frequencies, I ran the following command:

sudo tlp-stat -g

--- TLP 1.3.1 --------------------------------------------

+++ Intel Graphics

/sys/module/i915/parameters/enable_dc = -1 (use per-chip default)

/sys/module/i915/parameters/enable_fbc = -1 (use per-chip default)

/sys/module/i915/parameters/enable_psr = -1 (use per-chip default)

/sys/module/i915/parameters/modeset = -1 (use per-chip default)

/sys/class/drm/card0/gt_min_freq_mhz = 100 [MHz]

/sys/class/drm/card0/gt_max_freq_mhz = 1400 [MHz]

/sys/class/drm/card0/gt_boost_freq_mhz = 1400 [MHz]

The minimum and maximum frequencies for my GPU are 100 MHz and 1400 MHz.

Using only this information and my own preferences, this is my configuration at /etc/tlp.d/01-kapper.conf. To create it, I looked through the settings in /etc/tlp.conf and copied over the interesting ones.

TLP_ENABLE=1

TLP_DEFAULT_MODE=AC

# By checking output of `tlp-stat -p`

# + My CPU min freq is 400000; max is 5000000

CPU_SCALING_MIN_FREQ_ON_AC=400000

CPU_SCALING_MAX_FREQ_ON_AC=5000000

CPU_SCALING_MIN_FREQ_ON_BAT=400000

CPU_SCALING_MAX_FREQ_ON_BAT=1000000

# By checking output of `tlp-stat -g`

# + My Intel GPU min freq is 100; Max is 1400; Boost is 1400

INTEL_GPU_MIN_FREQ_ON_AC=100

INTEL_GPU_MAX_FREQ_ON_AC=1400

INTEL_GPU_BOOST_FREQ_ON_AC=1400

INTEL_GPU_MIN_FREQ_ON_BAT=100

INTEL_GPU_MAX_FREQ_ON_BAT=1000

INTEL_GPU_BOOST_FREQ_ON_BAT=1000

# Default: off (AC), on (BAT)

WIFI_PWR_ON_AC=off

WIFI_PWR_ON_BAT=on

# Set to 0 to disable, 1 to enable USB autosuspend feature.

# Default: 1

USB_AUTOSUSPEND=1

# Exclude listed devices from USB autosuspend (separate with spaces).

# Use lsusb to get the ids.

# Note: input devices (usbhid) are excluded automatically

# Default: <none>

#USB_BLACKLIST="1111:2222 3333:4444"

# Bluetooth devices are excluded from USB autosuspend:

# 0=do not exclude, 1=exclude.

# Default: 0

USB_BLACKLIST_BTUSB=0

# Radio devices to disable on startup: bluetooth, wifi, wwan.

# Separate multiple devices with spaces.

# Default: <none>

DEVICES_TO_DISABLE_ON_STARTUP="bluetooth"

# Radio devices to disable on battery: bluetooth, wifi, wwan.

# Default: <none>

#DEVICES_TO_DISABLE_ON_BAT="bluetooth"

# Radio devices to disable on battery when not in use (not connected):

# bluetooth, wifi, wwan.

# Default: <none>

DEVICES_TO_DISABLE_ON_BAT_NOT_IN_USE="bluetooth"

Don't forget to run sudo tlp start or reboot to apply the changes.

To confirm that the settings have been applied, compare your CPU frequencies and battery usage with and without TLP. Run sudo powertop and check the different tabs. Here are my CPU frequencies without TLP enabled:

And here they are with TLP enabled:

In general, I'm seeing an extra hour or two of battery life, possibly more in extreme cases where I'm only doing light browsing with Bluetooth off, a low display backlight, no keyboard backlight, etc.

Docker Power Usage

Surprisingly, by monitoring battery usage with powertop, I found that since installing Docker my laptop has a consistent extra power draw of around 3 W.

This is an enormous amount of power; it's over 30% of my total power consumption. To disable the docker0 network interface causing this drain, run the following command:

sudo ifconfig docker0 down

When you actually need Docker networking, you can re-enable it with a similar command:

sudo ifconfig docker0 up

I can't justify leaving this enabled all the time. At times the docker0 interface consumes more power than my display, which feels unreasonable for something I only use some of the time.
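ifconfig comes from the older net-tools package, which newer installs may not include. Below is a minimal sketch of the same toggle using ip from iproute2; the docker0_link function name is hypothetical, and it assumes the bridge is named docker0 as above.

```shell
# Hypothetical helper: bring the docker0 bridge up or down with iproute2,
# equivalent to `sudo ifconfig docker0 up` / `sudo ifconfig docker0 down`.
docker0_link() {
  case "$1" in
    up|down)
      sudo ip link set dev docker0 "$1"
      ;;
    status)
      # Shows the interface flags; UP appears in them while it is enabled
      ip link show dev docker0
      ;;
    *)
      echo "usage: docker0_link up|down|status" >&2
      return 2
      ;;
  esac
}
```

With this in ~/.bash_aliases, docker0_link down turns the bridge off when you're done with containers, and docker0_link up turns it back on before using them again.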

Bash

Bash Profiles

The following list describes files related to bash, along with their locations and uses.

/bin/bash

The bash executable

/etc/bash.bashrc

The system-wide bashrc for interactive bash shells. On Debian-based systems it is read by interactive non-login shells, and login shells also source it through /etc/profile.

/etc/skel/.bashrc

Used as a template for new users when initializing a basic .bashrc in their home directory.

/etc/profile

The system-wide initialization file, executed for login shells

/etc/bash.bash_logout

The system-wide login shell cleanup file, executed when a login shell exits

~/.bash_profile

The personal initialization file, executed for login shells

~/.bashrc

The individual per-interactive-shell startup file

~/.bash_aliases

An optional file sourced by the default ~/.bashrc, if it exists

~/.bash_logout

The individual login shell cleanup file, executed when a login shell exits

For more help, refer to the references and examples in /usr/share/doc/bash/. If you don't have these files, you can install them on Ubuntu with sudo apt install bash-doc and look in the /usr/share/doc/bash-doc/ directory. To help you explore these files, consider installing the terminal file browser ranger with sudo apt install ranger. Any .html files can be opened in a web browser for easier reading.

Creating Shells

From within man bash, we can find the following explanation of how bash starts an interactive login shell:

When bash is invoked as an interactive login shell, or as a non-interactive shell with the --login option, it first reads and executes commands from the file /etc/profile, if that file exists. After reading that file, it looks for ~/.bash_profile, ~/.bash_login, and ~/.profile, in that order, and reads and executes commands from the first one that exists and is readable. The --noprofile option may be used when the shell is started to inhibit this behavior.

In other words, when bash starts as an interactive login shell (or as a non-interactive shell with --login), it first reads /etc/profile. After reading that file, it looks for one of three files within the user's home directory and reads only the first one that exists. This means we can use /etc/profile to set system-wide settings for all login shells. For me, this is useful for making Vim the default editor for all users with the EDITOR and VISUAL exports below; users who want something else can override it.
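To see the "first one that exists" rule in action without touching your real dotfiles, the sketch below builds a throwaway home directory where each candidate file only prints its own name, then starts bash with --login. The login_file_demo function is hypothetical; /etc/profile still runs, so any output it produces is filtered out with grep.

```shell
# Hypothetical demo: create the given startup files in a temporary HOME,
# each one printing its own name, and show which one a login shell sources.
login_file_demo() {
  local demo_home f
  demo_home=$(mktemp -d)
  for f in "$@"; do
    printf 'echo "sourced: %s"\n' "$f" > "$demo_home/$f"
  done
  # --login: read /etc/profile, then the first readable file among
  # ~/.bash_profile, ~/.bash_login and ~/.profile, in that order.
  HOME="$demo_home" bash --login -c 'true' | grep '^sourced:'
  rm -rf "$demo_home"
}

login_file_demo .bash_profile .bash_login .profile   # sourced: .bash_profile
login_file_demo .bash_login .profile                 # sourced: .bash_login
login_file_demo .profile                             # sourced: .profile
```

Only one line is printed each time, which is why a ~/.profile is silently ignored once a ~/.bash_profile exists.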

System Profile

First, my /etc/profile can be seen in the code block below. It is the default file plus the EDITOR and VISUAL exports, and I added some comments to explain what the script is doing.

# /etc/profile: system-wide .profile file for the Bourne shell (sh(1))

# and Bourne compatible shells (bash(1), ksh(1), ash(1), ...).

# These lines set the system-wide default text editor to vim

export EDITOR='/usr/bin/vim'

export VISUAL='/usr/bin/vim'

if [ "${PS1-}" ]; then

if [ "${BASH-}" ] && [ "$BASH" != "/bin/sh" ]; then

# The file bash.bashrc already sets the default PS1.

# PS1='\h:\w\$ '

if [ -f /etc/bash.bashrc ]; then

. /etc/bash.bashrc

fi

else

if [ "`id -u`" -eq 0 ]; then

PS1='# '

# This branch only runs for shells other than bash (such as sh), for any user whose UID is 0

# In other words, these settings will be applied to the root user only.

else

PS1='$ '

# This branch also only runs for shells other than bash, in all other cases

# In other words, upon successful login as any user who is not root, this block will be executed

fi

fi

fi

# If the directory /etc/profile.d/ exists, source every file within it

# + See this directory for system defaults for interactive login shells for various programs

if [ -d /etc/profile.d ]; then

for i in /etc/profile.d/*.sh; do

if [ -r $i ]; then

. $i

fi

done

unset i

fi

The above /etc/profile configuration sets the default editor to Vim system-wide, regardless of which user is logged in, including the root user. Users can override this in their own ~/.bashrc, and since the system now uses Vim by default, users won't be prompted to select a default editor.

If you want to target specific users, or handle the root user separately from the rest of the system, take a closer look at the comments I've added in the above configuration file and modify it as needed. Keep in mind that the root and non-root branches in that file are only reached by shells other than bash. You could check a user ID here to source additional files, or you could handle that sort of thing in that user's ~/.bashrc.
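As one way to handle root separately for every login shell, bash included, the snippet below could be dropped into /etc/profile.d/, which the /etc/profile above sources. This is a sketch: the file name 99-editor.sh and the choice of vi for root are only examples, and it sticks to plain POSIX sh syntax because /etc/profile is also read by sh.

```shell
# /etc/profile.d/99-editor.sh  (hypothetical file name)
# /etc/profile sources every readable *.sh file here for login shells,
# so only POSIX sh syntax is used (no bash-only features).
if [ "$(id -u)" -eq 0 ]; then
  # UID 0 (root): example of giving root a different editor
  EDITOR='/usr/bin/vi'
else
  EDITOR='/usr/bin/vim'
fi
VISUAL=$EDITOR
export EDITOR VISUAL
```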

If you want the default text editor to be used for commands run with sudo, be sure to pass either the -E or --preserve-env argument. For example, to keep our Vim editor settings when running vigr or visudo, run sudo -E vigr or sudo --preserve-env visudo.

User Profiles

After reading /etc/profile, bash looks for one of three files: ~/.bash_profile, ~/.bash_login, and ~/.profile, in that order. The first file that exists is sourced and bash stops looking. For evidence of this, notice the comments in the first few lines of the ~/.profile file below, which point out the same order of execution described by man bash. Just after, within the first condition of the file, it becomes clear where ~/.bashrc comes into play:

# ~/.profile: executed by the command interpreter for login shells.

# This file is not read by bash(1), if ~/.bash_profile or ~/.bash_login

# exists.

# see /usr/share/doc/bash/examples/startup-files for examples.

# the files are located in the bash-doc package.

# the default umask is set in /etc/profile; for setting the umask

# for ssh logins, install and configure the libpam-umask package.

#umask 022

# if running bash

if [ -n "$BASH_VERSION" ]; then

# Include ~/.bashrc if it exists

if [ -f "$HOME/.bashrc" ]; then

. "$HOME/.bashrc"

fi

fi

# set PATH so it includes user's private bin if it exists

# + Any executables added to this directory will exist on your PATH

if [ -d "$HOME/bin" ] ; then

PATH="$HOME/bin:$PATH"

fi

# set PATH so it includes user's private bin if it exists (Alternate path)

# + Any executables added to this directory will exist on your PATH

if [ -d "$HOME/.local/bin" ] ; then

PATH="$HOME/.local/bin:$PATH"

fi

All my ~/.profile does above is source the ~/.bashrc file if it exists, and then add some default directories to my user's PATH if they exist. Different systems can handle this differently. For example, the line below does the same thing in a ~/.bash_profile:

if [ -f ~/.bashrc ]; then . ~/.bashrc; fi

So now we know that when you want to edit settings for users who invoke their own interactive shells, the ~/.bashrc file should be created or reconfigured. The rest of this page shows some basic syntax for editing the ~/.bashrc file, along with some examples.

Interactive Shells

An interactive shell is one that reads from and writes to the user's terminal. This means that bash can take input from the user and provide output back to them as a result. As described in the GNU Bash documentation, these shells often define the PS1 variable, which we will cover later. This variable describes how the user's bash prompt should appear within their session, and can be fun or useful to customize. An interactive shell is often a login shell, since you first need to authenticate with the system. On more feature-rich systems, though, you can also start an interactive non-login shell: for example, if you are already logged in and open a terminal application, you start a new interactive shell without logging in again.

Non-interactive Shells

A non-interactive shell is one that does not read commands from the user's terminal, and it often does not produce output for the user. A common example is running a script: when we invoke the script, we start a new shell that runs it, and this shell is non-interactive. These shells do not require a login, since they are invoked by users who are already logged in, so they are also considered non-login shells.
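A shell can check both properties itself. In the sketch below (shell_kind is a hypothetical name), the $- variable lists the active single-letter options and contains i in an interactive shell, while the read-only login_shell option is set only for login shells. The demo lines start fresh shells with --norc and --noprofile so your own startup files stay out of the way.

```shell
# Hypothetical helper: describe the current shell as
# (non-)interactive and (non-)login.
shell_kind() {
  local kind=non-interactive
  case $- in
    *i*) kind=interactive ;;
  esac
  if shopt -q login_shell; then
    kind="$kind login"
  else
    kind="$kind non-login"
  fi
  echo "$kind"
}

# Copy the function into fresh shells and ask them
bash --norc -c "$(declare -f shell_kind); shell_kind"              # non-interactive non-login
bash --login --noprofile -c "$(declare -f shell_kind); shell_kind" # non-interactive login
```

Running shell_kind directly in a terminal emulator would typically report an interactive non-login shell, while an SSH session typically reports an interactive login shell.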

Skeleton Configurations

As stated in the first section, the /etc/skel/ directory contains files that are distributed to each new user created on our system. This is useful to know, since we can directly modify these files to provide different default configurations provided when new users are created. This can be a nice way to ensure that all users start with the same aliases, or are shown a similar prompt. We can even specify other defaults here, like providing a default .vimrc to distribute to new users, or setting certain shell options.

Customizing Bashrc

Once logged in as your user, you can adjust your personal bash settings by modifying ~/.bashrc (that is, /home/username/.bashrc). If the file doesn't exist, you can create it and follow along with no additional setup. If it does exist, it can be a lot to look at at first, but some of the more important sections are shown below:

Bash prompt

# This controls how your prompt looks within terminals logged in as your user

if [ "$color_prompt" = yes ]; then

PS1='${debian_chroot:+($debian_chroot)}\[\033[01;32m\]\u@\h\[\033[00m\]:\[\033[01;34m\]\w\[\033[00m\]\$ '

else

PS1='${debian_chroot:+($debian_chroot)}\u@\h:\w\$ '

fi

Alias / export customizations

# some more ls aliases

alias ll='ls -alF'

alias la='ls -A'

alias l='ls -CF'

Additional files to source

# You may want to put all your additions into a separate file like

# ~/.bash_aliases, instead of adding them here directly.

# See /usr/share/doc/bash-doc/examples in the bash-doc package.

if [ -f ~/.bash_aliases ]; then

. ~/.bash_aliases

fi

Auto-completion

# enable programmable completion features (you don't need to enable

# this, if it's already enabled in /etc/bash.bashrc and /etc/profile

# sources /etc/bash.bashrc).

if ! shopt -oq posix; then

if [ -f /usr/share/bash-completion/bash_completion ]; then

. /usr/share/bash-completion/bash_completion

elif [ -f /etc/bash_completion ]; then

. /etc/bash_completion

fi

fi

Environment Variables

PS1: Environment variable which contains the value of the default prompt. It changes the shell command prompt appearance.

kapper@kubuntu-vbox $ export PS1='[\u@\h \W]\$'

[kapper@kubuntu-vbox ~]$

PS2: Environment variable which contains the prompt used when a command continues onto additional lines. You see it when you write a long command across several lines. By default this is set to > , and below it is changed to --> and shown after using the \ character to break a command into several lines:

[kapper@kubuntu-vbox ~]$ export PS2='--> '

[kapper@kubuntu-vbox ~]$ cp /some/really/long/system/path/fileOne \

--> fileTwo

PS3: Environment variable which contains the prompt shown by the select command inside a shell script.
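A short sketch of PS3 with select follows; the pick_fruit function and its menu items are made up for illustration. select prints a numbered menu and the PS3 prompt to stderr, then reads the choice from stdin, which is piped in here so the example runs unattended.

```shell
pick_fruit() {
  # PS3 is the prompt select shows after printing its numbered menu
  local PS3='Pick a fruit: '
  local fruit
  select fruit in apple banana cherry; do
    echo "You picked: $fruit"
    break   # leave the menu after one choice
  done
}

# Answer "2" automatically; the menu and prompt go to stderr
printf '2\n' | pick_fruit   # prints: You picked: banana
```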

PS4: Environment variable which contains the prefix printed before each line traced while a bash script runs in debug mode (bash -x). This could be used to show the line number at the current point of execution:

# $0 is the current file being executed, $LINENO is the current line number

[kapper@kubuntu-vbox ~]$ export PS4='$0:$LINENO'

[kapper@kubuntu-vbox ~]$ bash -x fix-vbox.sh

fix-vbox.sh:5grep 'VBoxClient --draganddrop'

fix-vbox.sh:6awk '{print $2}'

fix-vbox.sh:7xargs kill

fix-vbox.sh:8ps aux www
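As a variation on the example above, PS4 can also include the current function name and a trailing separator so the prefix doesn't run into the traced command. This is a sketch: trace_demo is a hypothetical function, and it turns tracing on with set -x inside the function rather than running a script with bash -x. Single quotes matter, since the variables must be expanded each time a line is traced.

```shell
trace_demo() {
  # FUNCNAME[0] is the current function; ":-main" covers top-level code
  local PS4='+ ${FUNCNAME[0]:-main}:${LINENO}: '
  set -x
  echo "hello"
  set +x
}

# stdout: hello
# stderr: a line like "+ trace_demo:<line>: echo hello" (set +x is traced too)
trace_demo
```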

PROMPT_COMMAND: Environment variable which contains command(s) to run before printing the prompt within the terminal.

[kapper@kubuntu-vbox ~]$export PROMPT_COMMAND='echo -n "$(date): " && pwd'

Sun 12 Sep 2021 05:00:55 PM EDT: /home/kapper

[kapper@kubuntu-vbox ~]$ls

Desktop Music Pictures Videos

Code Public Documents Downloads

Sun 12 Sep 2021 05:01:02 PM EDT: /home/kapper
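A more practical use of PROMPT_COMMAND is saving the exit status of the last command so the prompt can display it. This is a minimal sketch: __save_status and __last_status are hypothetical names, and the helper is prepended so it runs before anything else can change $?, while any PROMPT_COMMAND you already have is kept.

```shell
# Hypothetical helper: remember the exit status of the last command.
__save_status() {
  __last_status=$?
}

# Prepend the helper, keeping any PROMPT_COMMAND that is already set
PROMPT_COMMAND="__save_status${PROMPT_COMMAND:+; $PROMPT_COMMAND}"

# Single quotes: $__last_status is expanded each time the prompt is drawn
PS1='[$__last_status] \u@\h:\w\$ '
```

After running false, the next prompt should start with [1]; after a successful command it should show [0].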

Bash Aliases

Create a file named .bash_aliases in your home directory, and add any custom aliases or PATH modifications there. The file may not exist yet; if it doesn't, just create one and start listing aliases or settings. This way, when you want to adjust something like your PATH or aliases, you don't have to dig through all the contents of .bashrc. For example, some of the contents of my ~/.bash_aliases are shown below. The default ~/.bashrc sources this file if it exists, so it is loaded along with the rest of ~/.bashrc whenever an interactive shell starts.

# Alias / export customizations

alias gitkapp='git config --global user.name "Shaun Reed" && git config --global user.email "shaunrd0@gmail.com"'

# colored GCC warnings and errors

export GCC_COLORS='error=01;31:warning=01;35:note=01;36:caret=01;32:locus=01:quote=01'

# some more ls aliases

alias ll='ls -alF'

alias la='ls -A'

alias l='ls -CF'

The gitkapp alias above is a quick way to tell git who I am when logged in as a new user. You could imagine having more versions of this alias to switch between git users quickly. Alternatively, you could use the git config --local ... command within the alias to configure a specific repository for a certain user in a single command, without modifying your global git user. Aliases even show up automatically in tab completion:

[user@host ~]$git

git git-shell git-upload-pack

git-receive-pack git-upload-archive gitkapp

[user@host ~]$gitkapp
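Because aliases can't take arguments, a shell function is one way to generalize gitkapp. The gitas function below is a hypothetical sketch: it takes a name and an email, plus an optional --local flag that writes the identity to the current repository instead of your global config.

```shell
# Hypothetical function: set the git identity globally (default) or,
# with --local, only for the repository in the current directory.
gitas() {
  local scope=--global
  if [ "$1" = "--local" ]; then
    scope=--local
    shift
  fi
  if [ $# -ne 2 ]; then
    echo "usage: gitas [--local] NAME EMAIL" >&2
    return 2
  fi
  git config "$scope" user.name "$1" && git config "$scope" user.email "$2"
}

# Usage: gitas --local "Your Name" you@example.com
```

Like aliases, functions defined in ~/.bash_aliases also show up in tab completion.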

Identifying Unicode Symbols for use in .bashrc

Character search engine

If you don't have access to a terminal, you can look up a symbol with a character search engine to get its UTF-8 encoding. For example, the character below is encoded in UTF-8 as the bytes shown: ഽ = 0xE0 0xB4 0xBD

To output this symbol in a bash terminal using this hex value, we can test with echo -

echo -e '\xe0\xb4\xbd'

Note that these hexadecimal values are not case-sensitive.

Hexdump unicode symbol

Most Linux systems already have hexdump installed, so we could also run echo ✓ | hexdump -C to see the following output. Note that the -C option displays the input in canonical hex+ASCII format:

[kapper@kubuntu-vbox ~]$echo ✓ | hexdump -C

00000000 e2 9c 93 0a |....|

00000004

From this output, we can see that the UTF-8 hexadecimal encoding of our symbol is e2 9c 93; the trailing 0a is the newline that echo appends. Using this information, we can test the character with the echo statement below.

echo -e '\xe2\x9c\x93'

To print our ✓ symbol in green, use the command below. \001\033[1;32m\002 begins the green color, and \001\033[0m\002 resets to the default appearance:

echo -e '\001\033[1;32m\002\xe2\x9c\x93\001\033[0m\002'
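To skip copying bytes by hand, a small helper can turn a symbol into the \x escapes used above. This is a sketch: to_hex_escape is a hypothetical name, and it uses od (part of coreutils) in place of hexdump, with printf '%s' instead of echo so the trailing 0a newline is never included.

```shell
# Hypothetical helper: print a character's UTF-8 bytes as \xNN escapes
to_hex_escape() {
  # od -An -tx1: one hex byte per field, no address column
  # tr removes spaces/newlines; sed prefixes every byte pair with \x
  printf '%s' "$1" | od -An -tx1 | tr -d ' \n' | sed 's/../\\x&/g'
  echo
}

to_hex_escape ✓   # prints: \xe2\x9c\x93
```

The output can be pasted straight into a $'...' string, as in export PS1=$'\xe2\x9c\x93 '.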

Unicode.vim

If you use Vim, unicode.vim is an easy-to-use plugin for identifying characters. See my notes on the Unicode vim plugin for more information, or check out the official Unicode vim repository.

In any case, when using special characters and symbols in an assignment to PS1, you need to tell bash to interpret these escape sequences by placing a $ before the opening single quote (bash's ANSI-C quoting), as in export PS1=$'\xe2\x9c\x93'.

Bash Prompt

Your bash prompt is what you see before you type a command:

user@host:~$

The prompt above, user@host:~$, is defined by the PS1 variable within your ~/.bashrc, where \u is your username (user) and \h is the hostname (host). The \w in the prompt places the current directory (~, your home directory) before the final $:

# Bash prompt settings

if [ "$color_prompt" = yes ]; then

PS1='${debian_chroot:+($debian_chroot)}\[\033[01;32m\]\u@\h\[\033[00m\]:\[\033[01;34m\]\w\[\033[00m\]\$ '

else

PS1='${debian_chroot:+($debian_chroot)}\u@\h:\w\$ '

fi

By default, your .bashrc contains two prompt definitions: the first block includes color, the second does not. When first learning about the prompt and all the available options like \u, \h, and \w, it might be easier to look at the second prompt, which has no escape sequences for color. As we will see later, care must be taken to properly escape non-printing characters within your prompt, specifically color codes. That is the meaning of character sequences like \[\033[01;32m\] or \001\033[01;32m\002. Later we will cover the meaning of these sequences and how to properly organize them within your prompt.

You can change this prompt using the variety of settings below. Test your prompts with export PS1='<YOUR_PROMPT_HERE>', and once you have a working export, paste it into your ~/.bashrc to apply the change each time you log in. An export only lasts for the current session: if you don't put the PS1 assignment in your ~/.bashrc, the code above will set the prompt again the next time you log in.
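If your bash is 4.4 or newer, another way to try a prompt without replacing your current one is the ${parameter@P} expansion, which expands a string the same way bash expands PS1. The preview_prompt helper below is a hypothetical name used for this sketch:

```shell
# Hypothetical helper: show how a prompt string would render, without
# assigning it to PS1. Requires bash 4.4+ for the ${var@P} expansion.
preview_prompt() {
  local candidate=$1
  printf '%s\n' "${candidate@P}"
}

preview_prompt '[\u@\h \W]\$ '
```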

The ${debian_chroot:+($debian_chroot)} portion of PS1 above only affects our shell when we are using a chroot, which changes the apparent root directory for a process to a smaller, isolated directory tree that exists within the system. So if we are using a chroot, we will see the following prompt:

(chroot-name)user@host:~$

You can remove this ${debian_chroot:+($debian_chroot)} portion or leave it; it's entirely up to you.
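The ${var:+word} form is a bash parameter expansion: it expands to word only when var is set and non-empty, and to nothing otherwise. On Debian and Ubuntu, the default ~/.bashrc fills debian_chroot from /etc/debian_chroot when that file exists. The sketch below (chroot_prefix is a hypothetical name) shows both cases:

```shell
chroot_prefix() {
  local debian_chroot=$1
  # Expands to "(name)" only when debian_chroot is non-empty
  printf '%s\n' "${debian_chroot:+($debian_chroot)}"
}

chroot_prefix ''         # prints an empty line
chroot_prefix buildenv   # prints: (buildenv)
```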

Prompt Options

When setting your bash prompt, we have the following options available. Options are useful for getting information from the current bash session dynamically. For example, \u places the current username in the prompt, and \h prints the hostname. So export PS1='\u@\h:' makes our prompt username@hostname:

\a The ASCII bell character (you can also type \007)

\d Date in “Sat Sep 04” format

\e ASCII escape character (you can also type \033 or \x1B)

\h First part of hostname (such as “mybox”)

\H Full hostname (such as “mybox.mydomain.com”)

\j The number of jobs currently managed by this shell (such as processes you’ve suspended by hitting ^Z)

\l The basename of the shell’s terminal device (such as “ttyp4”)

\n Newline

\r Carriage return

\s The name of the shell executable (such as “bash”)

\t Time in 24-hour format (such as “23:59:59”)

\T Time in 12-hour format (such as “11:59:59”)

\@ Time in 12-hour format with am/pm

\u Your username

\v Version of bash (such as 2.04)

\V Bash version, including patchlevel

\w Current working directory, with your home directory abbreviated as ~ (such as “~/Code”)

\W The “basename” of the current working directory (such as “Code”)

\! Current command’s position in the history buffer

\# Command number (this will count up at each prompt, as long as you type something)

\$ If you are not root, inserts a “$”; if you are root, you get a “#”

\nnn Inserts the character whose code is the three-digit octal number nnn (such as “\007”)

\\ A backslash

\[ Same as \001. This sequence should appear before a sequence of characters that don’t move the cursor (like color escape sequences). This allows bash to calculate word wrapping correctly.

\] Same as \002. This sequence should appear after a sequence of non-printing characters.

\001 can be used directly in place of \[ and is recommended as a more portable option

\002 can be used directly in place of \] and is recommended as a more portable option

Background color codes

This section will cover using escape sequences to change the background color used within your bash prompt. This will have the effect of 'highlighting' the text in a certain color.

The following sequences set the background color of text printed within a bash terminal. Notice that each color has a corresponding light color, obtained by changing the leading 4 to a 10. For example, [42m and [102m are the green and light green background colors, respectively:

| Default color | \001\033[0;49m\002 | | |

| Black | \001\033[0;40m\002 | White | \001\033[0;107m\002 |

| Light Gray | \001\033[0;47m\002 | Dark Gray | \001\033[0;100m\002 |

| Red | \001\033[0;41m\002 | Light Red | \001\033[0;101m\002 |

| Green | \001\033[0;42m\002 | Light Green | \001\033[0;102m\002 |

| Yellow | \001\033[0;43m\002 | Light Yellow | \001\033[0;103m\002 |

| Blue | \001\033[0;44m\002 | Light Blue | \001\033[0;104m\002 |

| Magenta | \001\033[0;45m\002 | Light Magenta | \001\033[0;105m\002 |

| Cyan | \001\033[0;46m\002 | Light Cyan | \001\033[0;106m\002 |

Foreground color codes

This section will cover using escape sequences to change the font color used within your bash prompt

Using the appropriate bash syntax and the codes below, the \001\033[32m\002 escape code will color everything after it green until the output is reset with \001\033[0m\002. Technically, the color code is only the [32m portion, but it needs to be enclosed in \001\033 and \002. \001\033 is the more portable option for \[\e, and \002 is the more portable option for \].

So \001\033[32m\002 is both technically equivalent to and more portable than \[\e[32m\].

Also, the next sections cover attributes, which make up the 0 in \[\e[0;32m\]. Any attribute can be applied to any color by changing this leading value, or the 0; can be removed entirely to keep whatever attributes are currently set, as in \[\e[32m\].

The following sequences set the color of text in a bash terminal. Notice that each color has a corresponding light color, obtained by changing the leading 3 to a 9. For example, [32m and [92m are green and light green, respectively:

| Default color | \001\033[0;39m\002 | | |

| Black | \001\033[0;30m\002 | White | \001\033[0;97m\002 |

| Light Gray | \001\033[0;37m\002 | Dark Gray | \001\033[0;90m\002 |

| Red | \001\033[0;31m\002 | Light Red | \001\033[0;91m\002 |

| Green | \001\033[0;32m\002 | Light Green | \001\033[0;92m\002 |

| Yellow | \001\033[0;33m\002 | Light Yellow | \001\033[0;93m\002 |

| Blue | \001\033[0;34m\002 | Light Blue | \001\033[0;94m\002 |

| Magenta | \001\033[0;35m\002 | Light Magenta | \001\033[0;95m\002 |

| Cyan | \001\033[0;36m\002 | Light Cyan | \001\033[0;96m\002 |
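To see how these colors actually render in your terminal, a quick loop can print a sample of each foreground code from the table. This is a sketch (color_preview is a hypothetical name). It leaves out \001 and \002, since those markers are only needed by readline inside PS1, not in plain printf output.

```shell
# Print each foreground color code from the table on its own line:
# normal colors 30-37 and light colors 90-97.
color_preview() {
  local code
  for code in 30 31 32 33 34 35 36 37 90 91 92 93 94 95 96 97; do
    # \033[0;<code>m sets the color; \033[0m resets before the newline
    printf '\033[0;%sm%-3s sample text\033[0m\n' "$code" "$code"
  done
}

color_preview
```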

Reset attributes

The following sequences reset attributes that affect the appearance of text in a bash terminal, returning them to normal after the attribute was previously set. Note that the reset is technically only the [0m portion, but these also need to be wrapped in \001\033 and \002. Code 22 returns text to normal intensity, so it clears both bold and dim; the 21 code listed in some tables is treated as double underline by many terminals:

| Reset all attributes | \001\033[0m\002 |

| Reset bold and bright | \001\033[22m\002 |

| Reset dim | \001\033[22m\002 |

| Reset underline | \001\033[24m\002 |

| Reset blink | \001\033[25m\002 |

| Reset reverse | \001\033[27m\002 |

| Reset hidden | \001\033[28m\002 |

Set attributes

Any attribute can be applied to any color by changing the leading 0;, or the attribute value can be removed entirely and the current attribute settings are used, as in \[\e[32m\].

The following sequences set attributes that affect the appearance of text in a bash terminal. Note that each sequence is technically only the bracketed code, such as [1m, but these also need to be wrapped in \001\033 and \002:

| Set bold and bright | \001\033[1m\002 |

| Set dim | \001\033[2m\002 |

| Set underline | \001\033[4m\002 |

| Set blink | \001\033[5m\002 |

| Set reverse | \001\033[7m\002 |

| Set hidden | \001\033[8m\002 |

Prompt Examples

Any of the exports below can be pasted directly into the terminal to be tested. Once the terminal is closed, these settings are lost, so there's no need to worry about getting back to the default. This is a good way to test what would happen if you changed the PS1 within your ~/.bashrc, without actually doing so. If you mess up too badly, just close your terminal and open a new one. If you are logged in via SSH, you'll have to either source ~/.bashrc or log out and back into the server.

Note that when using special characters and symbols, you need to tell bash to interpret these values with a $ before opening your single-quotes, as in export PS1=$'\xe2\x9c\x93'

Note that the hexadecimal bytes of a symbol are printing characters, so they should not be wrapped in \001 and \002; only the non-printing color sequences are. See the examples below:

# Ok

echo -e '\001\033[1;32m\002\xde\x90\001\033[0m\002'

# Wrong, no need to wrap symbol hex value with `\001` and `\002`

echo -e '\001\033[1;32m\002\001\xde\x90\002\001\033[0m\002'

# Wrong, hexadecimal symbol is wrapped within `\001` and `\002`

echo -e '\001\033[1;32m\xde\x90\033[0m\002'

When writing custom prompts, this can become a lot to take in all at once. The following prompt doesn't even use color codes yet, and already it is quite a long line:

export PS1=$'\xe2\x94\x8c\xe2\x94\x80\xe2\x94\x80\u@\h\xe2\x94\x80[\W]\n\xe2\x94\x94\xe2\x94\x80\xe2\x95\xbc\$'

What I like to do is split the prompt between several append statements to PS1 within my .bashrc. An example of this prompt split across multiple lines shows it is much more readable and easier to adjust -

# Printing ┌──

PS1=''

PS1+=$'\xe2\x94\x8c'

PS1+=$'\xe2\x94\x80'

PS1+=$'\xe2\x94\x80'

# Printing kapper@kubuntu-vbox─[~]

PS1+='\u@\h'

PS1+=$'\xe2\x94\x80'

PS1+='[\W]'

# Move to next line

PS1+=$'\n'

# Printing └──╼$

PS1+=$'\xe2\x94\x94'

PS1+=$'\xe2\x94\x80'

PS1+=$'\xe2\x95\xbc'

PS1+='\$'

Alternatively, for practice or playing around, we can create a new file called .practice_prompt with the following contents. Then, we can just save the file and run source ~/.practice_prompt from a different terminal to enable the custom prompt and see the changes -

# Printing ┌──

export PS1=''

export PS1+=$'\xe2\x94\x8c'

export PS1+=$'\xe2\x94\x80'

export PS1+=$'\xe2\x94\x80'

# Printing kapper@kubuntu-vbox─[~]

export PS1+='\u@\h'

export PS1+=$'\xe2\x94\x80'

export PS1+='[\W]'

# Move to next line

export PS1+=$'\n'

# Printing └──╼$

export PS1+=$'\xe2\x94\x94'

export PS1+=$'\xe2\x94\x80'

export PS1+=$'\xe2\x95\xbc'

export PS1+='\$'

Splitting your PS1 assignment up not only makes it easier to read, but it suddenly becomes easy to comment out specific sections of the prompt when debugging issues with character spacing or adjusting the final appearance.
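When debugging spacing, it can also help to preview how a prompt string expands without making it the active prompt. The sketch below assumes bash 4.4 or newer, which added the ${parameter@P} expansion; test_ps1 is a throwaway name used only for this example.

```shell
#!/bin/bash
# Assumes bash 4.4+ for ${parameter@P}.
# Build a throwaway prompt string one piece at a time.
test_ps1='['
test_ps1+='\u@\h'
test_ps1+=$'\xe2\x94\x80'   # ─
test_ps1+='\W]\$ '

# Expand the prompt escapes (\u, \h, \W, \$) and print the result.
printf '%s\n' "${test_ps1@P}"
```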

Simple Prompt

We can start with a bare-minimum, simple export like the one below, before adding any color.

# Example of what the prompt will look like

[kapper@kubuntu-vbox ~]$

# Export to use this prompt

export PS1='[\u@\h \W]\$'

Colorized Prompt

Adding color to the prompt makes things look a bit more complicated, but if we stick to the rules outlined in the sections above we shouldn't have too much of an issue. Remember, if the prompt gets too long feel free to split it up between multiple appending statements within a file, then source that file. An example of this is shown in the earlier sections.

# Example of what the prompt will look like

[kapper@kubuntu-vbox ~]$

# Export to use this prompt

export PS1='\001\033[1;32m\002[\u@\h\001\033[0m\002 \W\001\033[1;32m\002]\$\001\033[0m\002'
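The same colorized prompt can be built with the appending style described earlier, storing each wrapped color code in a variable so it only has to be typed once. A sketch, where the names green and reset are arbitrary; the resulting PS1 holds the same sequences as the export above.

```shell
#!/bin/bash
green=$'\001\033[1;32m\002'   # bold green, wrapped as non-printing
reset=$'\001\033[0m\002'      # reset all attributes, wrapped as non-printing

PS1="$green"
PS1+='[\u@\h'
PS1+="$reset"
PS1+=' \W'
PS1+="$green"
PS1+=']\$'
PS1+="$reset"
```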

Symbols in Prompt

Let's take the colors out for now, and use some symbols to create a more interesting prompt. This prompt is based on the default prompt from the Parrot Linux distribution. This prompt will use special symbols, so to begin we use echo ┌ └ ─ ╼ | hexdump -C to get the below output.

[kapper@kubuntu-vbox ~]$echo ┌ └ ─ ╼ | hexdump -C

00000000 e2 94 8c 20 e2 94 94 20 e2 94 80 20 e2 95 bc 0a |... ... ... ....|

00000010

[kapper@kubuntu-vbox ~]$

Notice we passed four symbols with spaces between them. If we run the command ascii to see the ASCII table, we can see that the hexadecimal value of the space character is 20. These 20 bytes appear in the output above and separate the hexadecimal values of our symbols, so we can easily see where one begins and ends. We see that ┌ is e2 94 8c, followed by a space (20), then └ which is e2 94 94, and another space (20). Next, the ─ symbol is e2 94 80, followed by one more 20 and the final ╼ symbol's hex value of e2 95 bc. The trailing 0a is the newline that echo adds. We will need to place the hexadecimal values of our special characters in the position where we want each symbol to appear within our PS1 export.
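Reading bytes out of hexdump by hand works, but the conversion can also be scripted. The sketch below uses a hypothetical helper, to_hex_escape, built on the standard od command, to print a symbol directly in the \xNN form used inside $'...'.

```shell
#!/bin/bash
# Hypothetical helper: print the UTF-8 bytes of $1 as \xNN escapes.
to_hex_escape() {
  local byte out=''
  # od -An -v -tx1 prints each byte as two hex digits, without offsets
  for byte in $(printf '%s' "$1" | od -An -v -tx1); do
    out+="\\x$byte"
  done
  printf '%s\n' "$out"
}

to_hex_escape '┌'   # prints \xe2\x94\x8c
to_hex_escape '╼'   # prints \xe2\x95\xbc
```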

Below, we use this information to correctly use symbols in our bash prompt. Note that while pasting the raw symbol will appear to work, it will cause bugs in your prompt. The method below requires more effort, but it will not cause character spacing issues within your prompt.

# Example of what the prompt will look like

┌──kapper@kubuntu-vbox─[~]

└──╼$

# Export to use this prompt

export PS1=$'\xe2\x94\x8c\xe2\x94\x80\xe2\x94\x80\u@\h\xe2\x94\x80[\W]\n\xe2\x94\x94\xe2\x94\x80\xe2\x95\xbc\$'

Symbols and colors in Prompt

Here's everything together in one prompt.

# Example of what the prompt will look like

# NOTE: Color is lost here, but there will be color within your terminal

┌──kapper@kubuntu-vbox─[~]

└──╼$

# Export to use this prompt

export PS1=$'\001\033[1;31m\002\xe2\x94\x8c\xe2\x94\x80\xe2\x94\x80\001\033[1;32m\002\u@\h\001\033[1;31m\002\xe2\x94\x80[\001\033[0m\002\W\001\033[1;31m\002]\n\xe2\x94\x94\xe2\x94\x80\xe2\x95\xbc\001\033[1;32m\002\$\001\033[0;39m\002'

Examples

Read the manual page for bash!

If needed, check out my not-so-brief Introduction to Manual Pages to learn how to reference these manual pages more efficiently.

I would also recommend the book Bash Pocket Reference by Arnold Robbins. It is a pretty dense read but worth looking over; there are a lot of good examples, and it has been a useful reference for me to keep close by. It doesn't read like a typical book, but rather like a collection of examples and concepts that are common in bash scripting.

Redirecting Output

Knowing how to control your output streams in bash can make you much more effective at writing commands. For example, say you want to run clion in the current working directory (CWD) and fork the process to the background.

clion . &

[1] 178345

2021-12-18 16:13:28,384 [ 3869] WARN - l.NotificationGroupManagerImpl - Notification group CodeWithMe is already registered (group=com.intellij.notification.NotificationGroup@68d6e24d). Plugin descriptor: PluginDescriptor(name=Code With Me, id=com.jetbrains.codeWithMe, descriptorPath=plugin.xml, path=~/.local/share/JetBrains/Toolbox/apps/CLion/ch-0/213.5744.254/plugins/cwm-plugin, version=213.5744.254, package=null, isBundled=true)

2021-12-18 16:13:29,647 [ 5132] WARN - pl.local.NativeFileWatcherImpl - Watcher terminated with exit code 130

Woah! We've inherited the output from the process we forked to the background, and we've also lost immediate control of the process, so CTRL+C won't interrupt the program and stop the output as it normally would. To fix this, run fg to bring the process back to the foreground, and then try pressing CTRL+C again. The process will terminate and the output will stop.

If we want to fork this process to the background and redirect all of its output so we don't need to see it, we can run the following command. Note that in this case, we are redirecting to /dev/null to throw away the output. If we wanted to, we could instead redirect to a file and log the output.

clion . 2>/dev/null 1>&2 &

Or, use the shorter &> form, which bash provides as shorthand for redirecting both standard output and standard error.

clion . &>/dev/null &

If we want to redirect only standard output

clion . 1>/dev/null &

And if we want to redirect only standard error

clion . 2>/dev/null &
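To try these redirections without launching clion, a small stand-in function that writes one line to each stream makes the effect easy to see. The function name noisy is hypothetical. The last two lines also show why order matters: redirections are processed left to right.

```shell
#!/bin/bash
# Hypothetical stand-in for a noisy program: one line on each stream
noisy() {
  echo "to stdout"
  echo "to stderr" >&2
}

noisy &>/dev/null       # both streams discarded, prints nothing
noisy 1>/dev/null       # stdout discarded, prints "to stderr"
noisy 2>/dev/null       # stderr discarded, prints "to stdout"

noisy >/dev/null 2>&1   # stdout to /dev/null, then stderr follows it: nothing
noisy 2>&1 >/dev/null   # stderr copied to the terminal first, then stdout discarded: "to stderr"
```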

Creating Scripts

Bash scripting is much like interacting with the bash terminal - the similarity can be easily seen in how we would split a bash command to multiple lines...

kapak@base:~$ l\

> s -la

total 76

drwxr-xr-x 9 username username 4096 Jul 28 01:24 .

drwxr-xr-x 3 root root 4096 Jul 6 09:49 ..

-rw------- 1 username username 5423 Jul 20 18:10 .bash_history

-rw-r--r-- 1 username username 220 Jul 6 09:49 .bash_logout

-rw-r--r-- 1 username username 3771 Jul 6 09:49 .bashrc

...( Reduced Output ) ...

kapak@base:~$ ls -la

total 76

drwxr-xr-x 9 username username 4096 Jul 28 01:24 .

drwxr-xr-x 3 root root 4096 Jul 6 09:49 ..

-rw------- 1 username username 5423 Jul 20 18:10 .bash_history

-rw-r--r-- 1 username username 220 Jul 6 09:49 .bash_logout

-rw-r--r-- 1 username username 3771 Jul 6 09:49 .bashrc

...( Reduced Output ) ...

In a bash script, we would handle splitting ls -la across multiple lines much the same. Create the file below, name it test.sh -

#!/bin/bash

ls -la

l\

s -la

Now make the file executable and run the script. You should see the output of ls -la twice, once for each form of the command, since this script is a simple example of splitting commands across lines.

# Make the script executable

chmod a+x test.sh

# Run the script

./test.sh

This of course isn't a common use case, but it shows how you can use \ to effectively escape a newline and continue your command on the next line.
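A more typical use of \ is breaking a long command with many arguments into readable lines. A minimal illustration, where joined is just an example variable:

```shell
#!/bin/bash
# One echo command written across three lines. Each trailing \ escapes the
# newline, so bash runs: echo one two three
# The \ must be the last character on the line; a trailing space breaks it.
joined=$(echo one \
  two \
  three)

printf '%s\n' "$joined"   # prints: one two three
```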

Printf Formatting

printf is just one of many commands in bash, but you will use it a lot, so getting to know its syntax well will make your life a lot easier.

#!/bin/bash

## Author: Shaun Reed | Contact: shaunrd0@gmail.com | URL: www.shaunreed.com ##

## A custom bash script to configure vim with my preferred settings ##

## Run as user with sudo within directory to store / stash .vimrc configs ##

###############################################################################

# Example of easy colorization using printf

GREEN=$(tput setaf 2)

RED=$(tput setaf 1)

UNDERLINE=$(tput smul)

NORMAL=$(tput sgr0)

# Script Reduced, lines removed

# Example of creating an array of strings to be passed to printf

welcome=( "\nEnter 1 to configure vim with the Klips repository, any other value to exit." \

"The up-to-date .vimrc config can be found here: https://github.com/shaunrd0/klips/tree/master/configs" \

"${RED}Configuring Vim with this tool will update / upgrade your packages${NORMAL}\n\n")

# Create a printf format and pass the entire array to it

# Will iterate through array, filling format provided with array contents

# Useful for printing / formatting lists, instructions, etc

printf '%b\n' "${welcome[@]}"

read cChoice

# Script Reduced, lines removed

Using the above method, you could also create a single array containing the different responses for each path the script could take, and print only the one you need by referring to the appropriate index of the array directly, instead of passing all of the contents of the array to the same format.
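A sketch of that indexing approach, assuming the user's choice has already been mapped to an array index; the messages and the choice variable are hypothetical.

```shell
#!/bin/bash
# Hypothetical messages, one per path the script could take
responses=(
  "\nConfiguring vim with the Klips repository..."
  "\nSkipping vim configuration, exiting."
)

# Assume the user's answer has already been mapped to index 0 or 1
choice=1

# Print only the selected message; %b still expands escapes such as \n
printf '%b\n' "${responses[$choice]}"
```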

For more advanced formatting, read the below script carefully, and you will have a basic understanding of how printf can be used dynamically within scripts to provide consistent formatting.

#!/bin/bash

divider===============================

divider=$divider$divider

header="\n %-10s %8s %10s %11s\n"

format=" %-10s %08d %10s %11.2f\n"

width=43

printf "$header" "ITEM NAME" "ITEM ID" "COLOR" "PRICE"

printf "%$width.${width}s\n" "$divider"

printf "$format" \

Triangle 13 red 20 \

Oval 204449 "dark blue" 65.656 \

Square 3145 orange .7

# https://linuxconfig.org/bash-printf-syntax-basics-with-examples
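The width and padding flags used in header and format can be seen in isolation below. This sketch also uses bash's printf -v, which stores the formatted result in a variable (row is an example name) instead of printing it, which is handy when building up lines like the divider.

```shell
#!/bin/bash
# %-10s : left-justify a string in a 10-character column
# %10s  : right-justify a string in a 10-character column
# %08d  : pad an integer with leading zeros to 8 digits
printf -v row '|%-10s|%10s|%08d|' "Oval" "dark blue" 204449

printf '%s\n' "$row"   # prints |Oval      | dark blue|00204449|
```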

String Manipulation

Bash has useful features for manipulating strings. These strings may be in any format, but the examples below use strings that refer to directories and files, since this is a common scenario.

#!/bin/bash

# Plain assignment; `local` is only valid inside a function
teststring="/home/kapper/Code/"

echo "${teststring#/home/}" # Remove shortest substring from the left matching pattern `/home/` (outputs kapper/Code/)

echo "${teststring##*kapper/}" # Remove longest substring from the left matching pattern `*kapper/` (outputs Code/)